Schedule a demo of Alkymi

Interested in learning how Alkymi can help you go from unstructured data to instantly actionable insights? Schedule a personalized product demo with our team today!

Data Science Room

Approaches to increase confidence in content generated by large language models

A crucial aspect of machine learning systems is having confidence in the output of the models. Confidence enables us to trust that model outputs are reliable and to use those outputs in downstream applications and for decision-making. However, it is well known that large language models (LLMs) sometimes hallucinate the answers to questions, which creates uncertainty about their answers and their utility. This white paper discusses some of the approaches that can be used to elicit confidence in LLM outputs and discusses some of the ways we build confidence in model outputs at Alkymi.

Note: This white paper deals with the issue of confidence in LLM outputs. Confidence is one of the components of trust in machine learning. Other components include bias, stereotypes, ethics, privacy, confidence, and fairness (Wang et al., 2023). While this white paper only focuses on whether we can be confident that the answers provided by an LLM are factually correct, at Alkymi we acknowledge the importance of the other aspects of trust and continue to focus on improving trust of all types in our machine learning models.

Large language models (LLMs) have demonstrated their ability to complete complex natural language understanding tasks, e.g., translation and summarization, despite not explicitly being trained to perform those tasks (Radford et al., 2023; Wei et al., 2022). LLMs typically perform inference in 3 common settings:

Despite the impressive performance of LLMs on many downstream tasks in each of these inference settings, there is still significant concern about the possibility of LLMs hallucinating answers to questions. This should occur less frequently in the fine-tuning and in-context learning settings as the model is more familiar with the downstream tasks; however, there is no guarantee that hallucinations will not happen. Previous research has identified 3 main types of hallucination for LLMs (Zhang et al., 2023):

Hallucination is an important and active research area for LLMs (Zhang et al., 2023). At Alkymi, we apply several measures to minimize the risk of hallucinations when using our LLM-powered tools. The main issue that arises due to hallucinations is that they degrade trust in LLM outputs. In addition to hallucinations, LLMs are black boxes, where we don’t fully understand their internal working and reasoning, and thus it can be challenging to explain how they arrive at a given answer.

Building confidence in LLM output is important as it allows us to trust them, despite their black box nature and the fact that they occasionally hallucinate.

There are different ways that one could build confidence in LLM outputs. In this white paper, we discuss three: model explainability, confidence scoring, and attribution. We then discuss some practical implications and describe how we build confidence in LLM-powered tools at Alkymi.

Model explainability in machine learning refers to mechanisms that can be used to explain model outputs to build trust and confidence (1) (Zhao et al., 2023). There has been significant research on explainability in machine learning and natural language processing (Zhao et al., 2023; Zini & Awad, 2022; Danilevsky et al., 2020), and explainability is crucial when using machine learning in domains, such as medicine, law, and finance, where being able to document the reasons for decisions is important.

Explainability in NLP can act as a debugging aid when analyzing LLM output and can also be used to measure improvements in models (Zhao et al., 2023). Some traditional machine learning models often make use of hand-designed features, such as decision trees and logistic regression models. These models can sometimes be explained by analyzing split criteria or feature weights, which can provide insight into the importance of certain features in model decisions. However, LLMs and deep neural networks typically do not use hand-crafted features but learn them automatically from the data, thus making them harder to interpret and explain. This has led to the development of other explanation methods that attempt to pry open the black box, such as perturbation methods, which slightly modify the model input and observe what happens to the output; or by visualizing model attention (Vig, 2023). Other approaches make use of surrogate models, which are simpler and more explainable models that attempt to emulate the decisions of complex large models (Zhao et al., 2023).

The key idea behind model explainability is that the goal is to explain how a model came to an answer.

An alternative to model explainability is confidence scoring. In this approach, the goal is to produce a score that quantifies the extent to which you should trust the LLM’s output. When the model confidence is high then the answer can be trusted, and when the model confidence is low, further investigation is warranted. A requirement for this, however, is that model confidence is well-calibrated with model accuracy (Wightman et al., 2023) so that model confidence can be trusted as a predictor of answer quality.

One way to measure model confidence is with output token probabilities; however, this is an unreliable predictor of confidence for LLMs, especially closed-source LLMs (Xiong et al., 2023), thus necessitating the need for other approaches. Another approach involves training LLMs to generate natural language explanations for their reasoning (Rajani et al., 2019). This approach might not only help with explainability but could potentially also reduce hallucinations because the models are required to give explanations, which requires them to be more truthful.

Given the ability of LLMs to perform well at a variety of tasks, one might wonder if it’s possible to have an LLM explain its output. Lin et al. (2022) demonstrated that GPT-3 was capable of reliably demonstrating its confidence for math problems, though its performance on more generic question answering (for instance, over financial documents), was left as an open research question.

Finally, other approaches focus on prompting models multiple times with variants of a prompt and using the similarities among responses as an estimator of confidence (Wightman et al., 2023).

Attribution for LLMs is related to explainability; however, instead of focusing on why an LLM made a decision based on its underlying beliefs, the task instead is to provide the source for the decision (Bohnet et al., 2023). In the context of the document Question Answering (QA), the requirement for attribution is that the LLM shows where in a document (or in a collection of documents) it found an answer. Attribution for QA is challenging for several reasons. For instance, there could be multiple sources in a document that answer a specific question. This makes automatically evaluating the attribution capabilities of LLMs challenging, because it usually requires one to identify all possible sources and label them appropriately to perform a robust evaluation (2). Human evaluation of an answer and its source(s) has been proposed as the gold standard for evaluation, since it only requires a human to answer the question of whether the answer is supported by the source (Bohnet et al., 2023). Automatic methods have also been proposed, whereby another LLM is used to score whether the answer to a question is supported by a source (Bohnet et al., 2023). Another challenge for Attribution for QA is when an answer is a synthesis of information from multiple sources. In this case, all of those sources need to be identified and cited appropriately (Bohnet et al., 2023).

There are several methods proposed for attribution in LLMs. One method is known as retrieve-then-read. In this approach, a retrieval system retrieves passages in a database for a given question and then ranks them. An answer is generated from the highest ranked passage and, because the passage that generated the answer is known, that answer can be attributed to that passage (Izacard et al., 2022). This approach can be used with Retrieval Augmented Generation systems, but the utility may be dependent on the size of the chunks retrieved (3). An alternative to retrieve-then-read is post-hoc retrieval. In this setting, the answer to a question is first generated, and then a retrieval system is used to retrieve supporting facts. Another option is the LLM-as-a-retriever approach, where an LLM is trained to answer questions and perform retrieval, which can be used for attribution (Tay et al., 2023). A full discussion and evaluation of these different approaches is available in Bohnet et al. (2023).

The main goal of attribution is to build confidence by allowing an LLM user to see the support for an answer without needing to check it independently (Bohnet et al., 2023). Attribution also can be used for auditing purposes.

(1) In some contexts, explainability refers to all mechanisms that can be used to describe the reasons for model outputs. In this white paper, we use explainability to refer only to the underlying model mechanisms that can be used to explain outputs, e.g., weights, features, etc.

(2) Typically for automatic evaluation of LLM attribution, you would want to label all possible sources for answering a question. Then, when performing automatic evaluation you would assess the extent to which all of those sources were identified.

(3) Full page sources may be less useful than smaller paragraphs for attribution purposes.

Having confidence in the outputs produced by LLMs is important, especially in financial document processing, where having an answer may not be sufficient unless the reason for the LLM producing that answer is provided. As described above, there is a large amount of research into building confidence in LLM outputs, including methods for explaining underlying model mechanisms and providing attribution for answers.

Many LLM applications require that confidence information is available at run time. Additionally, confidence information should be easily accessible and interpretable as part of the application user interface. In many cases, these requirements rule out model explainability as a method for eliciting model confidence since it usually requires one to probe the underlying model mechanisms and the interpretation may not be straightforward. Therefore, model confidence scores and attribution may be more appropriate for eliciting model confidence in LLM applications.

For model confidence scores and attribution, the reasons for the model confidence must be interpretable to the user. For model confidence scores, this means providing evidence that the model scores are well-calibrated (Wightman et al., 2023) and that models are not acting over-confident when wrong (Feng et al., 2018). In many cases, this may mean providing a natural language description for the model confidence. In practice, attribution methods have the potential to be the most useful as they have the benefit of linking directly to sources, which allows users to validate the output in real-time.

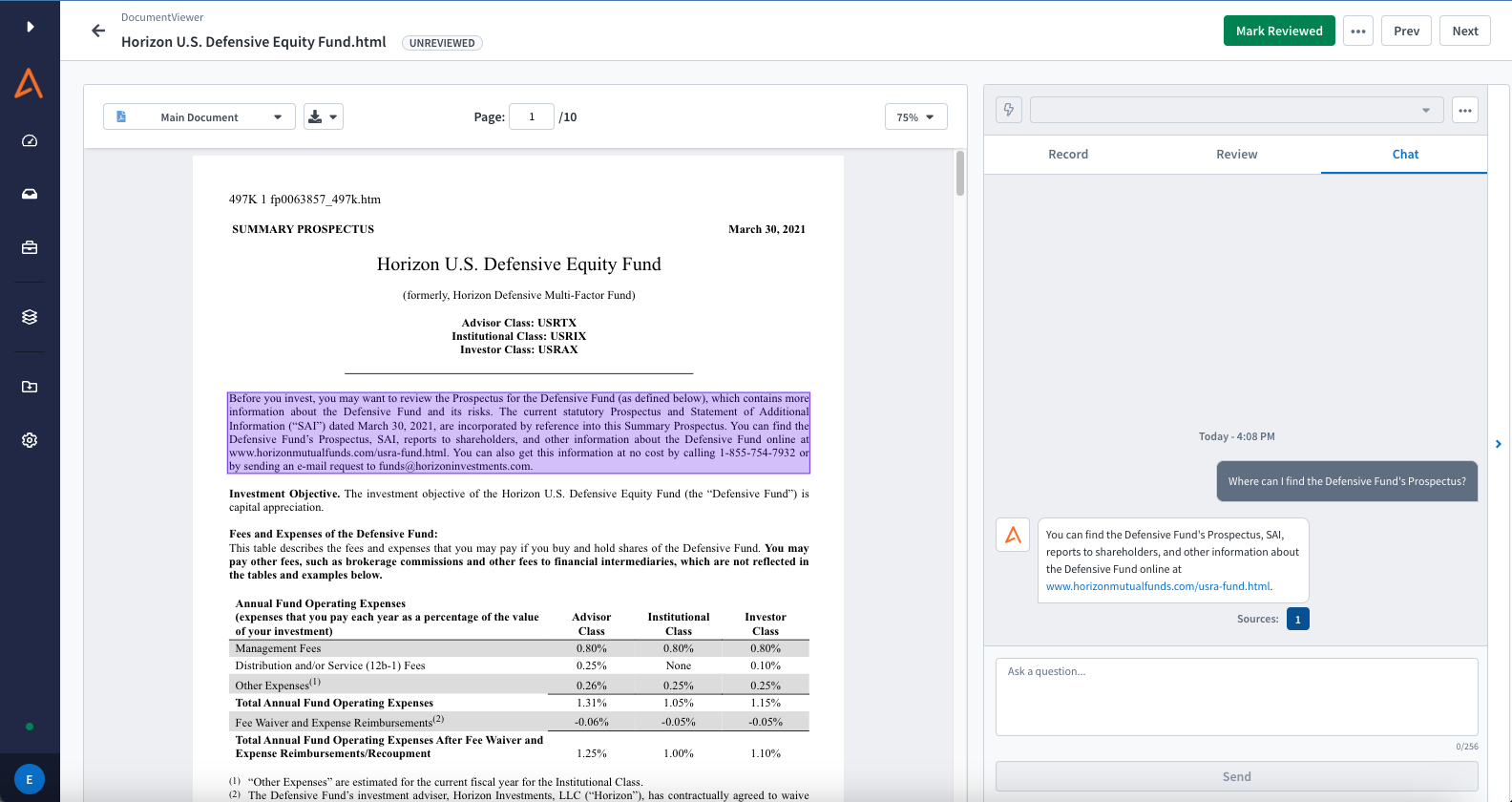

Having an audit trail for information extracted from financial documents is a core platform feature at Alkymi. Attribution is a natural fit for this since it provides a direct link between extracted information and the source in a document. Furthermore, attribution allows users to see the support for extracted information without needing to validate it independently (Bohnet et al., 2023). For these reasons, we identified attribution as the most logical and effective way to build confidence in LLM outputs at Alkymi. For all tools that perform information extraction as part of Alkymi Alpha (Answer Tool, Chat, etc.), Alkymi provides attribution information for each output through a feature known as Traceability.

Alkymi Traceability uses a model that combines our Document Object Model (an internal representation of a document’s components and layout), retrieve-then-read, post-hoc retrieval, semantic string matching, and ranking in producing high-quality attribution sources. Alkymi also fine-tunes LLMs specifically for attribution. In cases where an answer is lifted directly from a document, Alkymi links directly to that location in the document. In cases where the LLM provides a synthesis of information, Alkymi Traceability links back to the parts of the document that were used to generate that synthesis. Alkymi Traceability is LLM-agnostic, meaning that it works with commercial, self-hosted, and open-source LLMs.

Kyle Williams

Kyle Williams is a Data Scientist Manager at Alkymi. He has over 10 years of experience using data science, machine learning, and NLP to build products used by millions of users in diverse domains, such as finance, healthcare, academia, and productivity. He received his Ph.D. from The Pennsylvania State University and has published over 50 peer-reviewed papers in NLP and information retrieval.

Bohnet, B., Tran, V., Verga, P., Aharoni, R., Andor, D., Soares, L., Ciaramita, M., Eisenstein, J., Ganchev, K., Herzig, J., Hui, K., Kwiatkowski, T., Ma, J., Ni, J., Saralegui, L., Schuster, T., Cohen, W., Collins, M., Das, D., Metzler, D., Petrov, S., &Webster, K. (2022). Attributed question answering: Evaluation and modeling for attributed large language models. arXiv preprint arXiv:2212.08037.

Danilevsky, M., Qian, K., Aharonov, R., Katsis, Y., Kawas, B., & Sen, P. (2020). A Survey of the State of Explainable AI for Natural Language Processing. In Proceedings of the 1st Conference of the Asia-Pacific Chapter of the Association for Computational Linguistics and the 10th International Joint Conference on Natural Language Processing (pp. 447-459).

Feng, S., Wallace, E., Grissom II, A., Iyyer, M., Rodriguez, P., & Boyd-Graber, J. (2018). Pathologies of Neural Models Make Interpretations Difficult. In Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing (pp. 3719-3728).

Izacard, G., Lewis, P., Lomeli, M., Hosseini, L., Petroni, F., Schick, T., Dwivedi-Yu, J., Joulin, A., Riedel, S., & Grave, E. (2022). Few-shot learning with retrieval augmented language models. arXiv preprint arXiv:2208.03299.

Lin, S., Hilton, J., & Evans, O. (2022). Teaching models to express their uncertainty in words. arXiv preprint arXiv:2205.14334.

Radford, A., Wu, J., Child, R., Luan, D., Amodei, D., & Sutskever, I. (2019). Language models are unsupervised multitask learners. OpenAI blog, 1(8), 9.

Rajani, N. F., McCann, B., Xiong, C., & Socher, R. (2019, July). Explain Yourself! Leveraging Language Models for Commonsense Reasoning. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics (pp. 4932-4942).

Tay, Y., Tran, V., Dehghani, M., Ni, J., Bahri, D., Mehta, H., Qin, Z., Hui, K., Zhao, Z., Gupta, J., Schuster, T., Cohen, W. W., & Metzler, D. (2022). Transformer memory as a differentiable search index. Advances in Neural Information Processing Systems, 35, 21831-21843.

Touvron, H., Martin, L., Stone, K.R., Albert, P., Almahairi, A., Babaei, Y., Bashlykov, N., Batra, S., Bhargava, P., Bhosale, S., Bikel, D.M., Blecher, L., Ferrer, C.C., Chen, M., Cucurull, G., Esiobu, D., Fernandes, J., Fu, J., Fu, W., Fuller, B., Gao, C., Goswami, V., Goyal, N., Hartshorn, A.S., Hosseini, S., Hou, R., Inan, H., Kardas, M., Kerkez, V., Khabsa, M., Kloumann, I.M., Korenev, A.V., Koura, P.S., Lachaux, M., Lavril, T., Lee, J., Liskovich, D., Lu, Y., Mao, Y., Martinet, X., Mihaylov, T., Mishra, P., Molybog, I., Nie, Y., Poulton, A., Reizenstein, J., Rungta, R., Saladi, K., Schelten, A., Silva, R., Smith, E.M., Subramanian, R., Tan, X., Tang, B., Taylor, R., Williams, A., Kuan, J.X., Xu, P., Yan, Z., Zarov, I., Zhang, Y., Fan, A., Kambadur, M., Narang, S., Rodriguez, A., Stojnic, R., Edunov, S., & Scialom, T. (2023). Llama 2: Open Foundation and Fine-Tuned Chat Models. arXiv preprint arXiv:2307.09288.

Vig, J. (2019). A Multiscale Visualization of Attention in the Transformer Model. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics: System Demonstrations (pp. 37-42).

Wang, B., Chen, W., Pei, H., Xie, C., Kang, M., Zhang, C., Xu, C., Xiong, Z., Dutta, R., Schaeffer, R., Truong, S. T., Arora, S., Mazeika, M., Hendrycks, D., Lin, Z., Cheng, Y., Koyejo, S., Song, D., & Li, B. (2023). DecodingTrust: A Comprehensive Assessment of Trustworthiness in GPT Models. https://www.microsoft.com/en-u...

Wei, J., Bosma, M., Zhao, V. Y., Guu, K., Yu, A. W., Lester, B., Du, N., Dai, A. M., & Le, Q. v. (2022). Fine-tuned Language Models Are Zero-Shot Learners. 10th International Conference on Learning Representations.

Wightman, G. P., DeLucia, A., & Dredze, M. (2023). Strength in Numbers: Estimating Confidence of Large Language Models by Prompt Agreement. Proceedings of the Annual Meeting of the Association for Computational Linguistics, 326–362.

Xiong, M., Hu, Z., Lu, X., Li, Y., Fu, J., He, J., & Hooi, B. (2023). Can LLMs Express Their Uncertainty? An Empirical Evaluation of Confidence Elicitation in LLMs. arXiv preprint arXiv:2306.13063.

Zhang, Y., Li, Y., Cui, L., Cai, D., Liu, L., Fu, T., Huang, X., Zhao, E., Zhang, Y., Chen, Y., Wang, L., Tuan Luu, A., Bi, W., Shi, F., Shi, S., & lab, T. A. (2023). Siren’s Song in the AI Ocean: A Survey on Hallucination in Large Language Models. arXiv preprint arXiv.2309.01219.

Zhao, H., Chen, H., Yang, F., Liu, N., Deng, H., Cai, H., Wang, S., Yin, D., & Du, M. (2023). Explainability for large language models: A survey. arXiv preprint arXiv:2309.01029.

Zini, J. el, & Awad, M. (2022). On the Explainability of Natural Language Processing Deep Models. ACM Computing Surveys, 55(5).

Interested in learning how Alkymi can help you go from unstructured data to instantly actionable insights? Schedule a personalized product demo with our team today!